This post is the “amplified” version of my presentation at ALTC 2015. The presentation summarises the main arguments that are made in more detail in this post.

So let’s look at the 2015 Google Moonshot Summit for Education. No particular reason that I start here rather than anywhere else, other than the existence of Martin Hamilton’s succinct summing up on the Jisc blog. Here’s the list of “moonshot” ideas that the summit generated – taken together these constitute the “reboot” that education apparently needs:

- Gamifying the curriculum – real problems are generated by institutions or companies, then transformed into playful learning milestones that once attained grant relevant rewards.

- Dissolving the wall between schools and community by including young people and outsiders such as artists and companies in curriculum design.

- Creating a platform where students could develop their own learning content and share it, perhaps like a junior edX.

- Crowdsourcing potential problems and solutions in conjunction with teachers and schools.

- A new holistic approach to education and assessment, based on knowledge co-construction by peers working together.

- Creating a global learning community for teachers, blending aspects of the likes of LinkedIn, and the Khan Academy.

- Extending Google’s 20% time concept into the classroom, in particular with curriculum co-creation including students, teachers and the community.

“Gamification”, a couple of “it’s X… for Y!” platforms and crowd-sourcing. And Google’s “20% time” – which is no longer a thing at Google. Take away the references to particular comparators and a similar list could have been published at any time during my career.

The “future of education technology” as predicted has, I would argue, remained largely static for 11 years. This post explores why this is the case, and makes some limited suggestions as to what we might do about it.

Methodological issues

Earlier in 2015 I circulated a table of NMC Horizon Scan “important developments in educational technology” for HE between 2004 and 2015, which showed common themes constantly re-emerging. The NMC are both admirably public about their methods and clear that each report represents a “snapshot” with no claims to build towards a longitudinal analysis.

Methodologically, the NMC invite a bunch of expert commentators into a (virtual, and then actual) room, and ask them to sift through notable developments and articles to find overarching themes, which are then refined across two rounds of voting. There’s an emphasis on the judgement of the people in the room over and above any claims of data modelling, and (to me at least) the reports generally reflect the main topics of conversation in education technology over the recent past.

Though it is both fun and enjoyable to criticise the NMC Horizon Scan (and the list is long and distinguished) I’m not about to write another chapter of that book. The NMC Horizon Scan represents one way of performing a horizon scan – we need to look at it against others.

The only other organisation I know of that produces annual edtech predictions at a comparable scale is Gartner, with the Education Hype Cycle (pre-2008 the Higher Education Hype Cycle, itself an interesting shift in focus). Gartner have a proprietary methodology, but drawing on Fenn and Raskino’s (2008) “Mastering The Hype Cycle” it is possible to get a sense both of the underlying idea and the process involved.

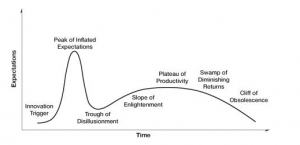

The Hype Cycle concept, as I’ve described before, is the bluntest of blunt instruments. A thing is launched, people get over-excited, there’s a backlash and then the real benefit of said thing is identified, and a plateau is reached. Fenn and Raskino describe two later components of this cycle, the Swamp of Diminishing Returns and the Cliff of Obsolescence, though these are seldom used in the predictive cycles we are used to seeing online (used in breach of their commercial license.)

Of course not everything moves smoothly through these stages, and as fans of MOOCs will recall it is entirely possible to be obsolete before the plateau. As fans of MOOCs will also recall, it is equally possible for an innovation to appear fully-formed at the top of the Peak of Inflated Expectations without any prior warning.

In preparing Hype Cycles Gartner lean heavily on a range of market signals including reports, sales and investments – and this is supplemented by the experience of Gartner analysts who in working closely with Gartner clients are well placed to identify emerging technologies. But the process skews data driven, whereas the NMC skews towards expertise. (There’s a University of Minnesota crowd-sourced version based on “expertise” that looks quite different. You can go and add your voice if you like.)

How else can we make predictions about the future of education technology? You’d think there would be a “big data” or “AI” player in this predictions marketplace, but other than someone like Edsurge or Ambient Insight extrapolating from investment data, and the obvious Google Trends looking at search query volumes, it appears that meaningful “big data” edtech predictions are themselves a few years in the future. (Or maybe no big data shops are confident enough to make public predictions…)

A final way of making predictions about the future would be to attend (or follow remotely) a conference like #altc. It could be argued that much of the work presented at this conference is “bleeding edge” experimentation, and that themes within papers could serve as a prediction of the mainstream innovations of the following year(s).

Why is prediction important?

Neoclassical economists would argue that the market is best suited to process and rate innovations, but reality is seldom as elegant as neoclassical economics.

Within our market-focused society, predictions could allow us to steal an advantage on the workings of the market and thus allow us to make a profit as the information we bring from our predictions is absorbed. As all of the predictions I discuss above are either actually or effectively open to all, this appears to be a moot point, as an efficient market would quickly “price in” such predictions. So the paradox here is that predictions are more likely to influence the overall direction of the market than offer any genuine first-order financial benefit.

As Philip Mirowski describes in his (excellent) “Never let a serious crisis go to waste” (2014): “It has been known for more than 40 years and is one of the main implications of Eugene Fama’s “efficient-market hypothesis” (EMH), which states that the price of a financial asset reflects all relevant, generally available information. If an economist had a formula that could reliably forecast crises a week in advance, say, then that formula would become part of generally available information and prices would fall a week earlier.”

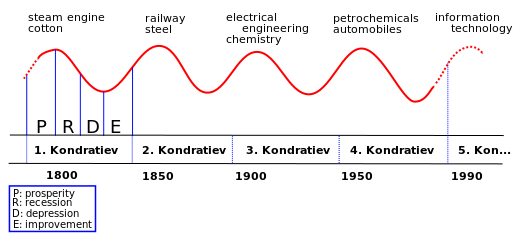

Waveform predictions – like the hype cycle, and Paul Mason’s suddenly fashionable Kondratieff Waves (or Schumpeter’s specific extrapolation of the idea of an exogenous economic cycle to innovation as a driver of long-form waves: Kondratieff describes the waves as an endogenous economic phenomenon…) – can be seen as tools to allow individuals to make their own extrapolations from postulated (I hesitate to say “identified”) waves in data.

(Kondratieff Waves, Rursus@wikimedia, cc-by-sa)

In contrast, Gartner’s published cycles say almost nothing useable about timescales, with each tracked innovation proceeding through the cycle (or not!) at an individual pace. In both cases, the idea of the wave itself could be seen as a comforting assurance that a rise follows a fall – by omitting the final two stagnatory phases of the in-house Gartner cycle it appears that every innovation eventually becomes a success.

But there is no empirical reason to assume waves in innovation such as these are anything other than an artefact (and thus a “priced-in” component) of market expectations.

Two worked examples

I chose to examine five potential “predictive” signals across up to 11 years of data (where available) for two “future of edtech” perennials: Mobile Learning and Games for Learning. I don’t claim any great knowledge of either of these fields, either historically or currently, which makes it easier for me to look at predictive patterns rather than predictive accuracy.

My hypothesis here is that there should be some recognisable relationship between these five sources of historic prediction.

Games in Education

| Year | NMC | Gartner | ALT-C | Ambient Insight | Google Trends |
|---|---|---|---|---|---|
| (notes) | (placement on “technologies to watch” HE horizon scan) | (placement on education hype cycle) | (conference presentations, “gam*”) | (tracked venture capital investment level) | (trends, “Games in education” level at mid-year & direction) |
| 2004 | Unplaced | Unplaced | 1 presentation | – | 68 (falling slightly) |
| 2005 | 2-3yrs #2 | Unplaced | 2 presentations | – | 37 (falling) |
| 2006 | 2-3yrs #2 | Unplaced | 5 presentations | – | 36 (stable) |
| 2007 | 4-5yrs #1 (massively multiplayer educational gaming) | Unplaced | 4 presentations | – | 30 (stable) |
| 2008 | Unplaced | Unplaced | 5 presentations | – | 42 (rising) |
| 2009 | Unplaced | Unplaced | 3 presentations | – | 41 (rising) |
| 2010 | Unplaced (also not on shortlist) | Unplaced | 3 presentations | $20-25m | 55 (rising) |
| 2011 | 2-3yrs #1 | Peak (2/10) | 1 presentation | $20-25m | 70 (rising) |
| 2012 | 2-3yrs #1 | Peak (2/8) | 2/231 presentations | $20-25m (appears to have been revised down from $50m) | 58 (sharp decline – 80 at start of year) |
| 2013 | 2-3yrs #1 | Peak (4/9) | 1/149 presentations; 1 SIG meeting | $55-60m | 46 (steady, slight fall) |
| 2014 | 2-3yrs #2 | Trough (8/18) | 0/138 presentations; 1 SIG meeting | $35-40m | 40 (steady, slight fall) |
| 2015 | N/A (was 2-3yrs trend 3) | Trough (11/14) | 4/170 presentations | n/a | 33 (falling) |

We see a distributed three-year peak between 2011 and 2013, with the NMC, Gartner and investors in broad agreement. Google Trends also hits the start of this peak before dropping sharply.

Interestingly, games for learning never became a huge theme at ALTC (until maybe this year #altcgame), but there is some evidence of an earlier interest between 2006 and 2008, which is mirrored by a shallower NMC peak.
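As a minimal sketch of how these two observations could be checked against the games table, the snippet below takes the ALT-C and Google Trends columns as transcribed above and reports the year(s) in which each reaches its maximum. The helper name `peak_years` is hypothetical, and the ALT-C figures are raw presentation counts, not normalised for the size of each year's programme.

```python
# Columns transcribed from the "Games in Education" table, 2004-2015.
YEARS = list(range(2004, 2016))
ALTC_PRESENTATIONS = [1, 2, 5, 4, 5, 3, 3, 1, 2, 1, 0, 4]  # raw counts, not normalised
GOOGLE_TRENDS = [68, 37, 36, 30, 42, 41, 55, 70, 58, 46, 40, 33]  # mid-year level


def peak_years(values, years=YEARS):
    """Return every year in which the series reaches its maximum value."""
    top = max(values)
    return [year for year, value in zip(years, values) if value == top]


print("ALT-C peak year(s):", peak_years(ALTC_PRESENTATIONS))
print("Google Trends peak year(s):", peak_years(GOOGLE_TRENDS))
```

A single maximum is a crude summary of a 12-point series, so this is only a first check before any closer reading of the curve.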

Mobile Learning

| Year | NMC | Gartner | ALT-C | Ambient Insight | Google Trends |
|---|---|---|---|---|---|
| (notes) | (word “phone” or “mobile”) | (word “phone” or “mobile”) | (search on session titles – “phone” or “mobile”) | Data available from 2010 only. | “Mobile Learning” – mid year value and description |
| 2004 | Unplaced | Unplaced | 1 presentation | – | 50 (steady) |
| 2005 | Unplaced | Unplaced | 4 presentations | – | 36 (choppy, large peak in May) |
| 2006 | “The Phones in Their Pockets” #1 2-3yr | Unplaced | 7 presentations | – | 42 (steady, climb to end of year) |
| 2007 | “Mobile Phones” #1 2-3yr | Unplaced | 10 presentations | – | 57 (fall in early year, rise later) |
| 2008 | “Mobile broadband” #1 2-3yr | Unplaced | 6 presentations | – | 56 (steady, peak in May) |
| 2009 | “Mobiles” #2 1-2yr | “Mobile Learning” Rise 10/11 (low end) 11/11 (smart) | 11 presentations | – | 56 (steady) |
| 2010 | “Mobile Computing” #2 1-2yr | “Mobile Learning” Peak 1/10 (low end) & 8/10 (smart) | 8 presentations | $175m | 81 (rising) |
| 2011 | “Mobiles” #2 1-2yr | “Mobile Learning” Peak 5/10 (low end) Trough 4/12 (smart) | 8 presentations | $150m | 94 (peak in June) |
| 2012 | “Mobile Apps” #1 1-2yr | “Mobile Learning” Trough 1/15 (low end) 8/15 (smart) | 12 presentations | $250m[1] | 81 (steady) |
| 2013 | Unplaced | “Mobile Learning” Trough 3/14 (low end) 10/14 (smart) | 4 presentations | $200m | 70 (falling) |
| 2014 | Unplaced | “Mobile Learning” Slope 5/13 (low end) Trough 17/18 (smart) | 5 presentations | $250m | 64 (falling) |
| 2015 | “BYOD” #1 1-2yr | “Mobile Learning” Slope 1/12 (smart) | 2 presentations | n/a | 59 (steady, fall in summer) |

Similarly, a three year peak (2010-12) is visible, this time with the NMC, Gartner and Google Trends in general agreement. The investment peak immediately follows, and ALTC interest also peaks in 2012.

Again there is early interest (2007-2009) at ALT-C before the main peak, and this is matched by a lower NMC peak. Mobile learning has persisted as a low-level trend at ALT throughout the 11 year sample period.

Discussion and further examples

A great deal more analysis remains to be performed, but it does appear that we can trace some synergies between key predictive approaches. Of particular interest is the pre-prediction wave noticeable in NMC predictions and correlating with conference papers at ALTC – this could be of genuine use in making future predictions.

Augmented reality fits this trend, showing two distinct NMC peaks (a lower one in 2006-7, a higher one in 2010-11). But ALTC presentations were late to arrive, accompanying only the latter peak alongside Google Trends, though CETIS conference attendees would have been (as usual) ahead of this curve. Investment still lags a fair way behind.

“Open Content” first appears on the NMC technology scan in 2010 and has yet to appear on the Gartner Hype Cycle, despite appearing in ALT presentations since 2008 and appearing as a significant wider trend – possibly the last great global education technology mega-trend – substantially before then. The earlier peak in “reusable learning objects” may form a part of the same trend, but what little data is available from the 90s is not directly comparable.

(MOOCs could perhaps be seen as the “investor friendly” iteration of open education. For Gartner, at least, they peak in 2012, fall towards 2014 and disappear entirely in 2015. They appear only once for the NMC, in 2013. ALT-C gets there in 2011 (though North American conferences were there, after a fashion, right from 2008), and there are 10 papers at this year’s conference. Venture capital investment in MOOCs continues to flow as providers return for multiple “rounds”. I’m seeing MOOCs as a different species of “thing”, and will return to them later.)

One possible map of causality could show the initial innovation influencing conference presentations (including ALTC), which would raise profile with NMC “experts”. A lull after this initial interest would allow Gartner and then investors to catch up, which would produce the bubble of interest shown in the Google Trends Chart.
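One way such a lead-and-lag chain could be probed is a lagged correlation: compare one signal in year t with another in year t + lag. The sketch below does this for the Mobile Learning table, using the ALT-C presentation counts as the candidate "leader" and the Google Trends values as the "follower". The function names are hypothetical, and with only 12 annual points the numbers can suggest a pattern but not establish causality.

```python
import math


def pearson(xs, ys):
    """Plain Pearson correlation coefficient for two equal-length series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


def lagged_correlation(leader, follower, lag):
    """Correlate leader[t] with follower[t + lag], for a lag of 0 or more years."""
    if lag == 0:
        return pearson(leader, follower)
    return pearson(leader[:-lag], follower[lag:])


# Columns transcribed from the "Mobile Learning" table, 2004-2015.
ALTC_MOBILE = [1, 4, 7, 10, 6, 11, 8, 8, 12, 4, 5, 2]
TRENDS_MOBILE = [50, 36, 42, 57, 56, 56, 81, 94, 81, 70, 64, 59]

for lag in range(4):
    r = lagged_correlation(ALTC_MOBILE, TRENDS_MOBILE, lag)
    print(f"ALT-C leading Google Trends by {lag} year(s): r = {r:.2f}")
```

If conference activity really does run ahead of wider interest, the correlation should be stronger at some positive lag than at lag 0. The same function could be pointed at any other pair of columns.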

Waveform analysis

A pattern as identified above is useful, but does not address our central issue regarding why the future is so dull. Why are ideas and concepts repeating themselves across multiple iterations?

Waves with peaks of differing amplitudes are likely to be multi-component – involving the super-position of numerous sinusoidal patterns. Whilst I would hesitate to bring hard physics into a model of a social phenomenon such as market prediction, it makes sense to examine the possibility of underlying trends influencing the amplitude of predictive waves of a given frequency.

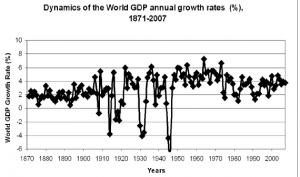

Korotayev and Tsirel (2010) use a spectral analysis technique to identify underlying waveforms in complex variables around national GDP, noting amongst other interesting findings the persistence of the Kuznets swing as a third harmonic (a peak with approximately three times the frequency) of the Kondratieff Cycle. This relationship can be used to argue for a direct relationship between the two – mildly useful in economic prediction. To be honest it looks more like smoothing artefacts, and the raw data is inconclusive.

(fig 1 from “Spectral Analysis of World GDP Dynamics”)
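To make the idea of superposition and spectral analysis concrete, here is a small sketch on synthetic data. It is not Korotayev and Tsirel's method or dataset. The signal is an assumption made up for illustration: a slow wave with a period of 60 samples plus a weaker third harmonic with a period of 20 samples. A plain discrete Fourier transform then recovers both periods from the combined wave.

```python
import cmath
import math


def dft_magnitudes(samples):
    """Magnitude of each discrete Fourier component from 0 up to the Nyquist bin."""
    n = len(samples)
    magnitudes = []
    for k in range(n // 2 + 1):
        component = sum(
            x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(samples)
        )
        magnitudes.append(abs(component))
    return magnitudes


# Synthetic, illustrative signal: a fundamental plus a weaker third harmonic.
N = 240
signal = [
    math.sin(2 * math.pi * t / 60) + 0.5 * math.sin(2 * math.pi * t / 20)
    for t in range(N)
]

magnitudes = dft_magnitudes(signal)
# Rank the non-constant components by strength and keep the strongest two.
dominant_bins = sorted(range(1, len(magnitudes)), key=lambda k: magnitudes[k], reverse=True)[:2]
dominant_periods = [N / k for k in dominant_bins]
print("Dominant periods (in samples):", dominant_periods)
```

On clean synthetic input the two components separate exactly. Real series such as GDP or prediction counts are short and noisy, which is where smoothing artefacts of the kind mentioned above can creep in.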

If we examine waves within predictions themselves, rather than dealing with an open, multi-variable system, it may be more helpful to start from the basis that we are examining a closed system. By assuming this I am postulating that predictions of innovations are self-reinforcing and can, if correctly spaced, produce a positive feedback effect. I’m also avoiding getting into “full cost” for the moment.

“Re-hyping” existing innovations – as is seen in numerous places even just examining NMC patterns – could be seen as a way of deliberately introducing positive feedback to amplify a slow recovery from the trough of the hype-cycle. For those who had invested heavily in an initial innovation that does not appear likely to gain mass adoption, a relaunch may be the only way of recouping this initial outlay.

Within a closed system, this effect will be seen as recurrent waves with rising amplitudes.
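The “if correctly spaced” condition can be illustrated with a toy model. Everything in it is an assumption chosen for illustration and nothing is fitted to the data above: hype is treated as a damped oscillation with an arbitrary period of 10 time steps, and each relaunch is a unit push. Pushes that arrive once per period add to what is left of the previous wave, while pushes that arrive half a period apart largely cancel it.

```python
import cmath
import math


def amplitudes_after_relaunches(spacing, relaunches=6, period=10, decay=0.97):
    """Amplitude of a damped oscillation just after each of a series of unit pushes.

    spacing: time steps between relaunches (hypothetical units)
    period:  natural period of the oscillation, in the same units
    decay:   fraction of amplitude that survives each time step
    """
    # One time step rotates the state by 1/period of a cycle and shrinks it.
    step = decay * cmath.exp(-2j * math.pi / period)
    state = 0j
    amplitudes = []
    for _ in range(relaunches):
        state += 1.0  # the relaunch: a push of fixed size
        amplitudes.append(abs(state))
        for _ in range(spacing):
            state *= step
    return amplitudes


in_phase = amplitudes_after_relaunches(spacing=10)     # one relaunch per full cycle
out_of_phase = amplitudes_after_relaunches(spacing=5)  # one relaunch per half cycle

print("Relaunch once per cycle:     ", [round(a, 2) for a in in_phase])
print("Relaunch every half a cycle: ", [round(a, 2) for a in out_of_phase])
```

In this toy the well-timed relaunches give a rising run of amplitudes, while the badly timed ones never get above the size of a single push. Because of the damping the rise eventually levels off; with `decay=1.0` it would keep growing.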

Conclusion

I’ve attempted to answer two questions, and have – in the grand tradition of social sciences research – been able to present limited answers to both.

Do predictions themselves show periodicity?

Yes, though a great deal of further analysis is required to identify this periodicity for a given field of interest, and to reach an appropriate level of fidelity for metaprediction.

Has something gone wrong with the future of education technology?

Yes.

If we see prediction within a neoliberal society as an attempt to shape rather than beat the market, there is limited but interesting evidence to suggest that the amplification of “repeat predictions” is a more reliable way of achieving this effect than feeding in new data. But this necessitates a perpetual future to match a perpetual present. Artificial intelligence, for example, is always just around the corner. Quantum computing has sat forlornly on the slope of enlightenment for the past nine years!

Paul Mason – in “PostCapitalism” (2015) – is startlingly upbeat about the affordances of data-driven prediction. As he notes “This [prediction], rather than the meticulous planning of the cyber-Stalinists, is what a postcapitalist state would use petaflop-level computing for. And once we had reliable predictions, we could act.”

Mirowski is bleaker (remember that these writers are talking about the entire basis of our economy, not just the ed-tech bubble!): “The forecasting skill of economists is on average about as good as uninformed guessing.

- Predictions by the Council of Economic Advisors, Federal Reserve Board, and Congressional Budget Office were often worse than random.

- Economists have proven repeatedly that they cannot predict the turning points in the economy.

- No specific economic forecasters consistently lead the pack in accuracy.

- No economic forecaster has consistently higher forecasting skills predicting any particular economic statistic.

- Consensus forecasts do not improve accuracy (although the press loves them. So much for the wisdom of crowds).

- Finally, there’s no evidence that economic forecasting has improved in recent decades.”

This is for a simple “higher or lower” style directional prediction, based on complex mathematical models. For “finger-in-the-air” future-gazing and scientific measurement of little more than column inches, as we get in edtech, such predictions are worse than useless. And may be actively harmful.

Simon Reynolds suggests in “Retromania” (2012) that “Fashion – a machinery for creating cultural capital and then, with incredible speed, stripping it of value and dumping the stock – permeates everything”. But in edtech (and arguably, in economic policy) we don’t dump the stock, we keep it until it can be reused to amplify an echo of whatever cultural capital the initial idea has. There are clearly fashions in edtech. Hell – learning object repositories were cool again two years ago.

Now what?

There is a huge vested interest in perpetuating the “long now” of edtech. Marketing copy is easy to write without a history, pre-sold ideas easier to sell than new ones. University leaders may even remember someone telling them two years ago of the first flourishing of an idea that they are being sold for the first time. Every restatement amplifies the effect, and the true meaning of the term “hype-cycle” becomes clear.

MOOCs are a counter-example – the second coming of the MOOC (in 2012) bore almost no resemblance to the initial iteration in 2008-9 other than the name. I’d be tempted to label MOOCs-as-we-know-them as a HE/Adult-ed specific outgrowth of the wider education reform agenda.

You can spot a first iteration of an innovative idea (and these are as rare and as valuable as you might expect) primarily by the absence of an obvious way by which it will make someone money. Often it will use established, low cost tools in a new way. Venture capital will not show any interest. There will not be a clear way of measuring effectiveness – attempts to use established measurement tools will fail. It will be “weird”, and it will be difficult to convince managers to allocate time or resource to it, even at a proof-of-concept level.

So my tweetable takeaway from this session is: “listen to practitioners, not predictions, about education technology”. Which sounds a little like Von Hippel’s Lead User theory.

Data Sources

NMC data: summary file, Audrey Watters’ GitHub, further details are from the wiki.

Gartner: Data sourced from descriptive pages (eg this one for 2015). Summary Excel file.

ALTC programmes titles:

2004, 2005, 2006, 2007, 2008, 2009, 2010, 2011, 2012, 2013, 2014, 2015 (incidentally, why *do* ALT make it so hard to find a simple list of presentation titles and presenters? You’d think that this might be useful for conference attendees…)

![Screen-Shot-2015-06-29-at-17.53.36[1]](http://followersoftheapocalyp.se/wp-content/uploads/2015/09/Screen-Shot-2015-06-29-at-17.53.361-300x67.png)

Don’t tell them to grow up and out of it – they’re quite aware of what they’re going through.
